Claude 3.5 Sonnet vs GPT-4o: Context Window and Token Limit

Artificial intelligence (AI) language models have become indispensable tools across industries, enabling applications ranging from customer support to academic research and software development. Among the latest advancements in this domain, Claude 3.5 Sonnet by Anthropic and GPT-4o by OpenAI stand out as two prominent models. Both models are designed to handle a wide range of tasks, but they differ significantly in terms of their context window and token limit—two critical parameters that influence their performance, versatility, and suitability for specific use cases. This report provides a detailed comparison of these two models, focusing on their context window and token limit capabilities, and evaluates their strengths, weaknesses, and ideal applications.

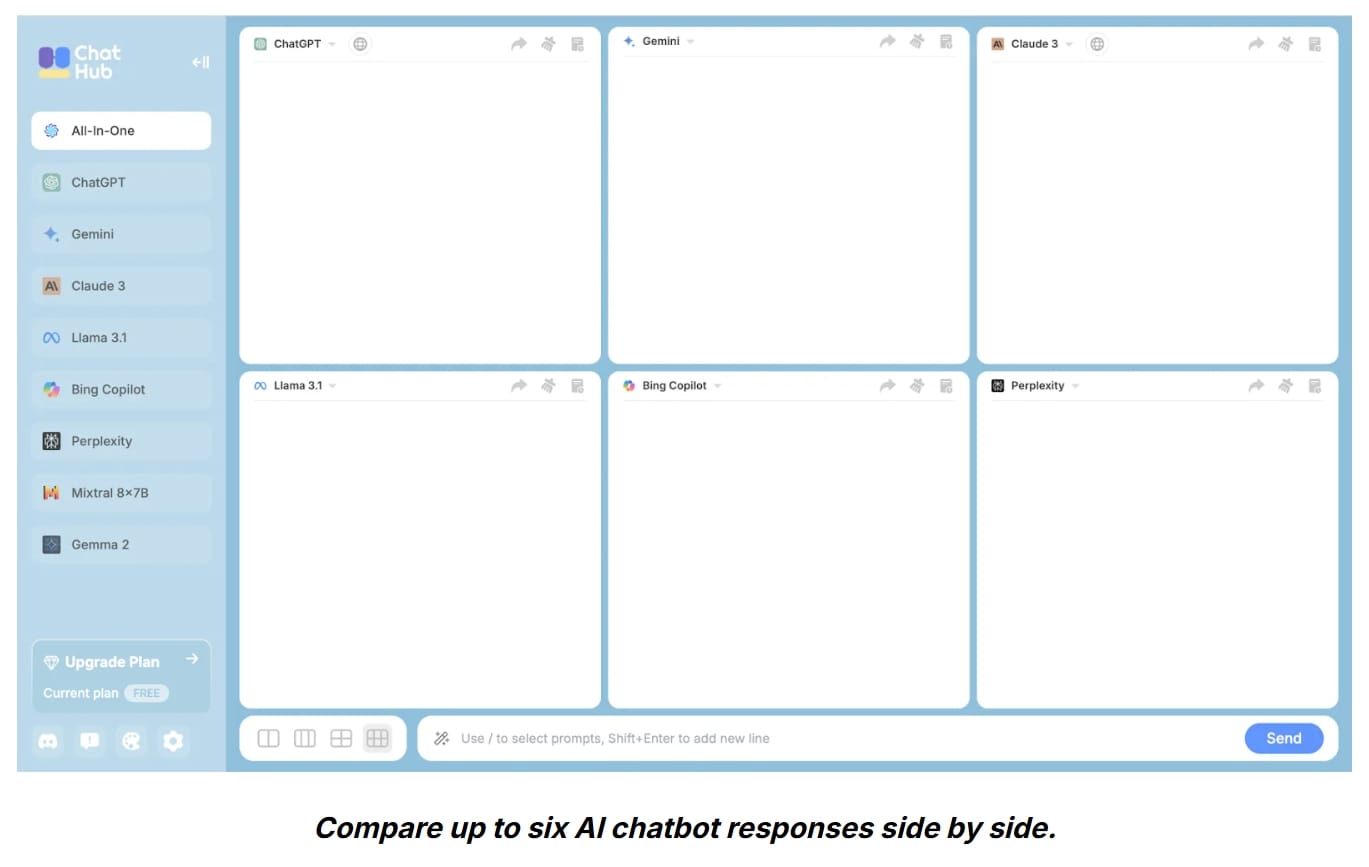

Understanding Context Windows and Token Limits

What Are Context Windows and Token Limits?

A context window refers to the amount of text a language model can "remember" or process in a single interaction. It determines how much prior information the model can retain to generate coherent and contextually relevant responses. A larger context window allows the model to handle longer conversations or documents without losing track of earlier content.

Token limits, on the other hand, cap the number of tokens (units of text) that a model can handle in one request. In this comparison, the term refers mainly to the output token limit: the maximum number of tokens a model can generate in a single response. Tokens can represent words, punctuation marks, or even parts of words, depending on the tokenization method used by the model. For example, the sentence "AI is transforming the world!" might be tokenized as six tokens: "AI," "is," "transforming," "the," "world," and "!". The output token limit directly affects a model's ability to produce long-form content in one pass, while the context window governs how much material it can draw on while doing so.
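The word-and-punctuation split in the example above can be reproduced with a small sketch. The `naive_tokenize` helper is hypothetical and only illustrates the idea; production models use subword tokenizers, so their real token counts will usually differ.

```python
import re

def naive_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens.

    Illustrative assumption only: real model tokenizers split on subwords.
    """
    # \w+ matches a run of word characters; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = naive_tokenize("AI is transforming the world!")
print(tokens)       # ['AI', 'is', 'transforming', 'the', 'world', '!']
print(len(tokens))  # 6
```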

Claude 3.5 Sonnet: Context Window and Token Limit

Context Window

Claude 3.5 Sonnet has a context window of 200,000 tokens, larger than GPT-4o's 128,000. This lets the model take in and refer back to a large amount of text within a single interaction. For example, it can analyze lengthy legal documents, large datasets, or sizable codebases without losing track of the overarching context, provided the material fits within that limit.

This capability is particularly advantageous for tasks that require maintaining context over extended periods, such as:

- Legal document analysis: Processing contracts, case files, or regulations.

- Academic research: Generating comprehensive reports from large datasets.

- Customer support: Managing multi-step workflows and context-sensitive queries.
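Whether a given document fits in the window can be estimated before sending it. The sketch below is a rough check, not a vendor API: the helper names are hypothetical, and the ratio of four characters per token is an assumed rule of thumb for English text. An exact count requires the model's own tokenizer.

```python
import math

CLAUDE_35_SONNET_WINDOW = 200_000  # context window quoted in this article

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the characters-per-token ratio is an assumption."""
    return math.ceil(len(text) / chars_per_token)

def fits_in_window(text: str, window: int = CLAUDE_35_SONNET_WINDOW) -> bool:
    """Return True if the estimated token count does not exceed the window."""
    return estimate_tokens(text) <= window

contract = "x" * 600_000  # stand-in for a document of about 600,000 characters
print(estimate_tokens(contract))  # 150000
print(fits_in_window(contract))   # True
```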

Token Limit

Claude 3.5 Sonnet supports an output token limit of 4,096 tokens, which caps the length of any single response. This is smaller than GPT-4o's limit, so very long outputs have to be produced across several responses. The large context window does not raise that cap, but it does let the model keep far more source material and conversation history in view while generating each response.

GPT-4o: Context Window and Token Limit

Context Window

GPT-4o features a context window of 128,000 tokens, which, while smaller than Claude 3.5 Sonnet's, is still substantial. This context window allows GPT-4o to handle moderately long interactions and documents effectively. For example, it is well-suited for creative content generation, complex reasoning tasks, and moderately extended conversations.

Although GPT-4o's context window is smaller than Claude 3.5 Sonnet's, it strikes a balance between memory capacity and computational efficiency. This makes it a practical choice for applications where extremely large context windows are not a necessity.

Token Limit

GPT-4o offers a higher output token limit of 16,384 tokens, allowing it to generate more detailed and elaborate responses within its context window. This feature makes GPT-4o particularly effective for tasks that require nuanced and lengthy outputs, such as:

- Creative writing: Generating stories, scripts, or articles.

- Technical documentation: Producing detailed manuals or guides.

- Complex reasoning: Solving intricate problems or answering multi-faceted queries.

However, as the input approaches the maximum context window size, GPT-4o's performance may degrade, leading to increased hallucinations and reduced recall accuracy. This necessitates careful management of input and output tokens to optimize the model's effectiveness.
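One way to manage input and output tokens is to reserve room for the response before deciding how much input to send. The sketch below assumes that input and output tokens share the same context window; the `max_input_tokens` helper and the optional safety margin are illustrative, not part of any vendor SDK.

```python
GPT_4O_WINDOW = 128_000     # context window quoted in this article
GPT_4O_MAX_OUTPUT = 16_384  # output token limit quoted in this article

def max_input_tokens(window: int, reserved_output: int, safety_margin: int = 0) -> int:
    """Tokens left for the prompt after reserving output space and a margin."""
    budget = window - reserved_output - safety_margin
    if budget < 0:
        raise ValueError("reserved output and margin exceed the context window")
    return budget

print(max_input_tokens(GPT_4O_WINDOW, GPT_4O_MAX_OUTPUT))                       # 111616
print(max_input_tokens(GPT_4O_WINDOW, GPT_4O_MAX_OUTPUT, safety_margin=8_000))  # 103616
```

Keeping a margin below the hard limit is a simple way to stay away from the region where recall may degrade.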

Comparative Analysis: Claude 3.5 Sonnet vs GPT-4o

Context Window

Claude 3.5 Sonnet's 200,000-token context window is significantly larger than GPT-4o's 128,000-token context window. This gives Claude 3.5 Sonnet a clear advantage in applications that require processing large volumes of text or maintaining context over extended interactions. For instance:

- Legal and academic research: Claude 3.5 Sonnet can analyze entire books or datasets in one sequence, whereas GPT-4o might require segmentation of the input.

- Codebase management: Claude 3.5 Sonnet is better suited for handling large-scale software projects, where maintaining long-term context is crucial.
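The segmentation mentioned above can be sketched as fixed-size chunking over an already tokenized input. This is a minimal illustration with a hypothetical `split_into_segments` helper; a real pipeline would also reserve room for the prompt and response and would prefer to split on paragraph or section boundaries.

```python
def split_into_segments(tokens: list[str], max_tokens: int) -> list[list[str]]:
    """Split a token list into consecutive segments of at most max_tokens each."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [tokens[i:i + max_tokens] for i in range(0, len(tokens), max_tokens)]

document = ["tok"] * 180_000  # stand-in for a 180,000-token document

# With a 128,000-token window the document needs two segments.
print([len(s) for s in split_into_segments(document, 128_000)])  # [128000, 52000]

# With a 200,000-token window it fits in one.
print([len(s) for s in split_into_segments(document, 200_000)])  # [180000]
```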

However, GPT-4o's context window is still substantial and sufficient for most use cases, especially those that do not require processing extremely large inputs.

Token Limit

In terms of output token limit, GPT-4o outperforms Claude 3.5 Sonnet with a maximum of 16,384 tokens compared to Claude's 4,096 tokens. This makes GPT-4o more suitable for tasks that demand detailed and lengthy outputs, such as:

- Writing long-form content like novels or technical guides.

- Generating comprehensive responses to complex queries.
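The practical effect of the two output limits can be estimated with simple arithmetic: the number of responses needed is the target length divided by the limit, rounded up. The 50,000-token target below is an arbitrary example, and `responses_needed` is a hypothetical helper.

```python
import math

# Output token limits as quoted in this article.
OUTPUT_LIMITS = {"GPT-4o": 16_384, "Claude 3.5 Sonnet": 4_096}

def responses_needed(target_tokens: int, output_limit: int) -> int:
    """Minimum number of responses needed to emit target_tokens in total."""
    return math.ceil(target_tokens / output_limit)

for model, limit in OUTPUT_LIMITS.items():
    print(model, responses_needed(50_000, limit))
# GPT-4o 4
# Claude 3.5 Sonnet 13
```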

On the other hand, Claude 3.5 Sonnet's smaller output token limit is partly offset by its larger context window, which helps it stay coherent when a long output has to be generated across several responses.

Performance and Efficiency

Using Claude 3.5 Sonnet's larger context window to its full extent comes at the cost of higher computational requirements and longer processing times, since more tokens must be processed per request. For tasks that do not need that capacity, the extra headroom brings no benefit. In contrast, GPT-4o is designed to balance performance with resource efficiency, making it a more cost-effective option for many applications.

Application Suitability

Claude 3.5 Sonnet

Claude 3.5 Sonnet is ideal for:

- Legal and academic research: Processing large documents and generating comprehensive reports.

- Customer support: Managing multi-step workflows and maintaining context over long interactions.

- Software development: Handling large codebases and ensuring consistency across projects.

GPT-4o

GPT-4o is better suited for:

- Creative content generation: Writing stories, scripts, and articles.

- Technical documentation: Producing detailed manuals or guides.

- Cost-sensitive applications: Balancing performance with resource efficiency.
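The suitability guidance above can be turned into a first-pass filter on the two limits. The sketch below is only a heuristic under stated assumptions: it uses the figures quoted in this article, assumes input and output share the context window, and ignores cost, latency, and output quality. The `ModelLimits` class and `candidates` function are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelLimits:
    name: str
    context_window: int  # total tokens per request (assumed to cover input + output)
    max_output: int      # maximum tokens in a single response

MODELS = [
    ModelLimits("GPT-4o", 128_000, 16_384),
    ModelLimits("Claude 3.5 Sonnet", 200_000, 4_096),
]

def candidates(input_tokens: int, output_tokens: int) -> list[str]:
    """Names of models whose limits cover the request in a single call."""
    return [
        m.name
        for m in MODELS
        if output_tokens <= m.max_output
        and input_tokens + output_tokens <= m.context_window
    ]

print(candidates(150_000, 2_000))   # ['Claude 3.5 Sonnet']
print(candidates(20_000, 10_000))   # ['GPT-4o']
print(candidates(150_000, 10_000))  # []
```

An empty result means neither model can serve the request in one call, so the input would need segmenting or the output would need to span several responses.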

Conclusion

The choice between Claude 3.5 Sonnet and GPT-4o ultimately depends on the specific requirements of the task at hand. Claude 3.5 Sonnet's 200,000-token context window makes it the superior choice for applications that demand extensive context retention and the ability to process large volumes of text. In contrast, GPT-4o's higher output token limit and cost-efficiency make it a practical option for tasks that require detailed outputs but do not necessitate an extremely large context window.

Both models offer unique strengths and cater to different use cases, making them valuable tools in the AI landscape. Developers and businesses should carefully evaluate their needs and priorities to select the model that best aligns with their objectives.