Understanding ChatGPT's Use of Customer Prompts

ChatGPT, an advanced AI language model, is increasingly used in customer service, where it helps businesses automate interactions with human-like responses. Its effectiveness, however, depends heavily on the quality of the prompts it receives. A well-crafted prompt can yield precise, relevant, and actionable responses, while a poorly constructed one may produce vague or irrelevant output. This report explores how ChatGPT is used in customer service and presents six example prompts for common customer service tasks. The insights are drawn from multiple sources, including recent articles and guides on ChatGPT prompting strategies.

ChatGPT's Role in Customer Service

ChatGPT has emerged as a transformative tool for customer service, enabling businesses to automate routine tasks, enhance customer engagement, and improve overall efficiency. By leveraging AI-driven responses, companies can handle high volumes of customer inquiries, provide multilingual support, and personalize interactions. For instance, ChatGPT can assist with tasks such as:

- Generating quick replies to frequently asked questions.

- Crafting personalized responses for customer queries.

- Analyzing customer sentiment and feedback.

- Creating troubleshooting guides and chatbot scripts.

The Importance of Effective Prompts

Prompts are the backbone of ChatGPT's functionality. A prompt is the instruction or query that guides the AI in generating a response, and the quality of that response depends heavily on the prompt's clarity, specificity, and context. For example, a vague prompt like "Tell me about customer service" may yield a generic response, whereas a specific prompt like "Provide three tips for improving customer satisfaction in e-commerce" will generate a more targeted and actionable reply.

Effective prompts can help businesses achieve the following:

- Improve Response Accuracy: Clear and specific prompts reduce ambiguity, ensuring that ChatGPT generates relevant responses.

- Save Time: Well-crafted prompts minimize the need for follow-up questions, streamlining customer interactions.

- Enhance Customer Satisfaction: By providing precise and helpful responses, businesses can foster trust and loyalty among customers.

6 Best ChatGPT Prompts for Customer Service

1. Analyzing Customer Feedback Trends

Prompt Example:

"Using historical data from the past [time period], what are the emerging trends in customer satisfaction levels in the [industry] industry, and how can we improve areas with declining satisfaction rates?"

Application:

This prompt is designed to identify patterns and trends in customer satisfaction. ChatGPT cannot see your company's records unless you provide them, so paste the relevant historical feedback data into the conversation along with the prompt. It can then highlight areas where satisfaction is declining and suggest targeted strategies for improvement.

Benefits:

- Identifies pain points in customer interactions.

- Helps prioritize areas that require immediate attention.

- Provides actionable recommendations for enhancing customer satisfaction.

2. Tailoring Customer Experience

Prompt Example:

"How can we customize our customer experience strategy to cater to [specific customer segment] based on their preferences and behavior?"

Application:

This prompt helps businesses create personalized experiences for specific customer segments. When you describe the segment's behavior and preferences, or supply relevant data, ChatGPT can suggest tailored strategies that resonate with that audience.

Benefits:

- Increases customer loyalty and retention.

- Enhances the overall customer experience.

- Drives higher engagement and satisfaction levels.

3. Resolving Customer Escalations

Prompt Example:

"As a senior expert in escalation management, analyze the following customer escalation case and provide a step-by-step conflict resolution strategy that respects the company’s values while ensuring customer satisfaction: [Insert Case Details]."

Application:

This prompt is particularly useful for complex customer issues that have been escalated. Asking ChatGPT to take the role of a senior escalation manager steers it toward structured, professional steps for resolving the conflict.

Benefits:

- Helps resolve escalations quickly and effectively.

- Maintains the company’s reputation by handling issues professionally.

- Builds trust and confidence among customers.

4. Customer Retention Strategies

Prompt Example:

"Based on the following customer churn data, suggest strategies to improve retention rates and foster long-term customer relationships: [Insert Data]."

Application:

This prompt focuses on analyzing customer churn data to develop strategies for retaining customers. By identifying the reasons behind customer attrition, businesses can implement measures to improve retention rates.

Benefits:

- Reduces customer churn and increases loyalty.

- Identifies key factors contributing to customer attrition.

- Provides actionable insights to improve retention strategies.

5. Training Customer Support Teams

Prompt Example:

"Based on common customer pain points such as [Insert Pain Points], create a training script for our support team to handle these issues effectively."

Application:

This prompt is ideal for developing training materials for customer support teams. By addressing common pain points, ChatGPT can create scripts that equip support agents with the skills and knowledge needed to handle customer issues efficiently.

Benefits:

- Improves the quality of customer support.

- Reduces average handling time and increases resolution rates.

- Enhances the confidence and competence of support agents.

6. Personalization Strategies

Prompt Example:

"Provide strategies for offering a personalized experience to customers who [Insert Specific Behavior or Preference]."

Application:

This prompt helps businesses design strategies around specific customer behaviors or preferences. Given a clear description of that behavior, or data you supply, ChatGPT can suggest customized approaches that enhance the customer experience.

Benefits:

- Increases customer engagement and satisfaction.

- Builds stronger relationships with customers.

- Drives higher conversion rates and revenue.
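Each template above uses bracketed placeholders such as [industry] or [Insert Case Details] that must be replaced before the prompt is sent. If a team reuses these templates often, a small helper can fill them in and flag any placeholder left blank. The following is a minimal sketch in Python; `fill_prompt` is a hypothetical helper, not part of any ChatGPT tool, and it assumes placeholders are always written in square brackets. It only builds the prompt text and does not call ChatGPT or any API.

```python
import re

# Hypothetical convention: placeholders are written as [name], e.g. [industry].
PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")


def fill_prompt(template: str, values: dict) -> str:
    """Replace each [placeholder] with its value; raise KeyError if any is left unfilled."""
    missing = [name for name in PLACEHOLDER.findall(template) if name not in values]
    if missing:
        raise KeyError(f"Unfilled placeholders: {missing}")
    # Every placeholder is known at this point, so the lookup cannot fail.
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


template = (
    "Using historical data from the past [time period], what are the emerging "
    "trends in customer satisfaction levels in the [industry] industry, and how "
    "can we improve areas with declining satisfaction rates?"
)
prompt = fill_prompt(template, {"time period": "12 months", "industry": "e-commerce"})
print(prompt)
```

Raising an error on a missing value is a deliberate choice: a prompt sent with a literal "[industry]" still gets an answer, but usually a generic one, which is exactly the vague output that well-crafted prompts are meant to avoid.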

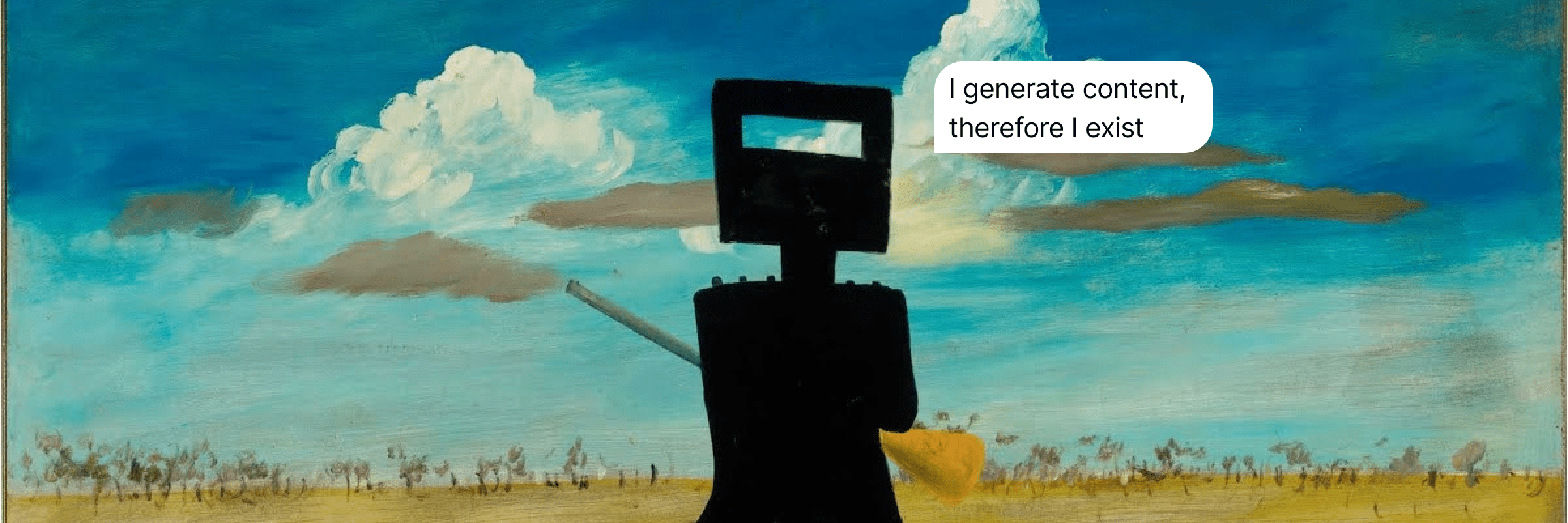

Simplifying Your Path to AI Interaction

Want to create more high-quality prompts, but find the results unsatisfying because you lack prompt-writing expertise?

Meet Prompt Whisperer. This AI tool boosts creativity and productivity by generating context-aware prompts. It helps content creators, students, and professionals overcome writer's block and brainstorm ideas, and its user-friendly interface lets anyone create tailored content.

Oncely.com now offers a $37 lifetime subscription to Prompt Whisperer: pay once, use it forever, backed by a 60-day full money-back guarantee.